MUTENESS

System Concept Statement

I want to design a system that allows mute people to speak through gestures with the help of Midas. For this purpose, all hand gestures of the user are recorded and translated into the user's native language in real time. Therefore, the system must be as simple as possible, and it has to support multiple gesture recognition. If the user wants to train the gestures, there must be a way to do this without setting up a lot of thresholds. Since it is very difficult to fully translate sign language, only individual gestures are translated. In sum, the system should be portable, so that the user is able to use it anywhere.

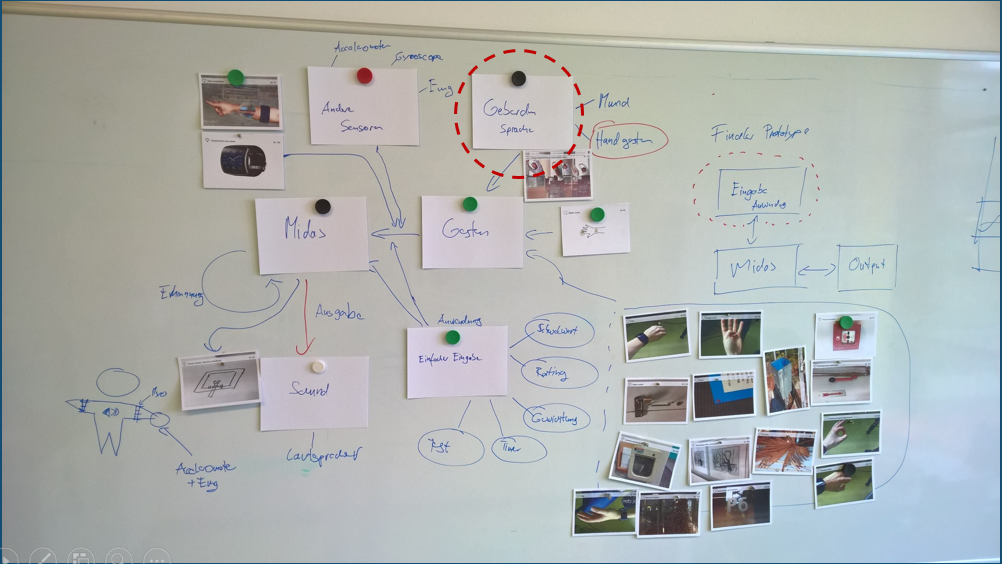

Inspiration Card Workshop

Before creating the system concept statement, I conducted an inspiration card workshop with the leading question “What kind of gestures would you use with Midas (a gesture recognition software)?”. The result is displayed below. During the session, the idea arose to use gestures from sign language. The overall outcome of the workshop was therefore to build a system that transforms sign language into spoken language.

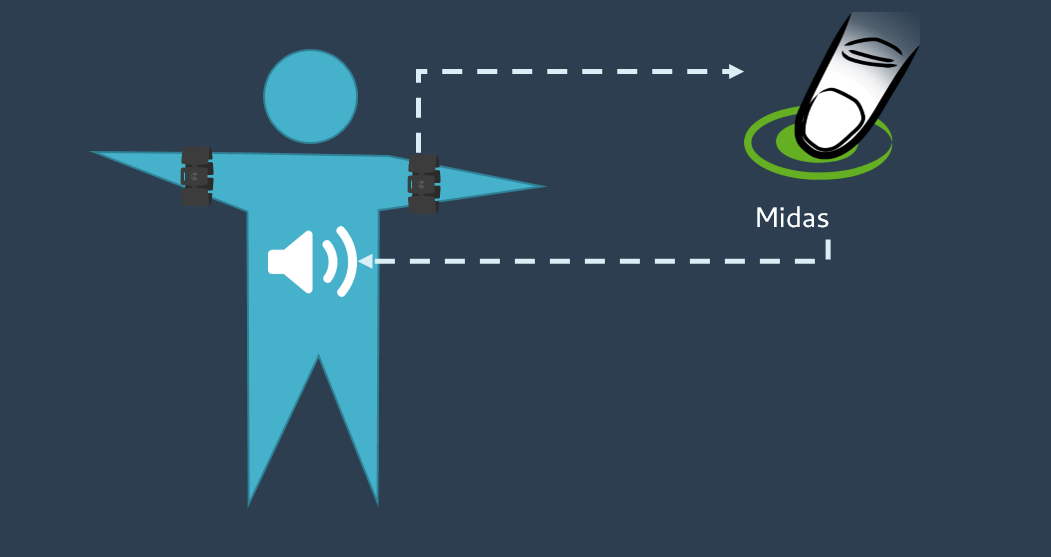

Basic Idea

The user wears a Myo wristband on one arm, preferably the dominant one (the right arm for right-handed users, and vice versa for left-handed users). All motions performed by the user are automatically streamed to the software called Midas. Midas tries to recognize the performed gestures and translates them into audio output. In addition to the Myo, the user wears a speaker that plays the result of the translation by Midas.

Midas - The Software

Midas is software that I developed as part of my master project. With Midas, the user can construct and test signal processing pipelines, which enables gesture recognition with the aid of a Myo wristband. All gestures have to be trained first; afterwards they can be detected by the software.

Workflow

The user first constructs a pipeline for signal processing, using nodes from the following three categories:

- Input

- Processing

- Output

Once this has been done, the user can start training gestures. To do so, he performs the desired gesture, which is recorded and stored as a template. A template is a kind of snapshot of a gesture that contains all the important data. When the user performs the trained gesture again, Midas can recognize it by comparing the data stream with the stored template.

For more information you can read my project thesis, which will be published soon.

Basic Pipeline Steps

All data coming from the Myo band is normalized and then inserted into a feature vector. To detect the gestures, a dynamic time warping (DTW) algorithm is used.
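The two steps can be illustrated with a minimal sketch. It is not the Midas implementation: the class name `DtwSketch`, the `maxAbs` scaling parameter and the Euclidean local cost are assumptions made for illustration only. It shows the textbook form of dynamic time warping, in which a template and a stream of feature vectors are aligned so that differences in speed do not count as differences in shape.

```csharp
using System;

public static class DtwSketch
{
    // Scales raw samples into [-1, 1]; maxAbs is an assumed sensor range.
    public static double[] Normalize(double[] raw, double maxAbs)
    {
        var result = new double[raw.Length];
        for (int i = 0; i < raw.Length; i++)
            result[i] = raw[i] / maxAbs;
        return result;
    }

    // Euclidean distance between two feature vectors of equal length.
    private static double Cost(double[] a, double[] b)
    {
        double sum = 0;
        for (int i = 0; i < a.Length; i++)
        {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.Sqrt(sum);
    }

    // Classic O(n*m) dynamic time warping distance between two
    // sequences of feature vectors.
    public static double Distance(double[][] template, double[][] stream)
    {
        int n = template.Length, m = stream.Length;
        var dtw = new double[n + 1, m + 1];
        for (int i = 0; i <= n; i++)
            for (int j = 0; j <= m; j++)
                dtw[i, j] = double.PositiveInfinity;
        dtw[0, 0] = 0;

        for (int i = 1; i <= n; i++)
        {
            for (int j = 1; j <= m; j++)
            {
                double cost = Cost(template[i - 1], stream[j - 1]);
                double best = Math.Min(dtw[i - 1, j],
                              Math.Min(dtw[i, j - 1], dtw[i - 1, j - 1]));
                dtw[i, j] = cost + best;
            }
        }
        return dtw[n, m];
    }
}
```

A gesture performed more slowly than its template still yields a small distance, because one template frame may be matched to several stream frames. The distance is then compared with a threshold to decide whether the gesture counts as recognized.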

Technical Plan

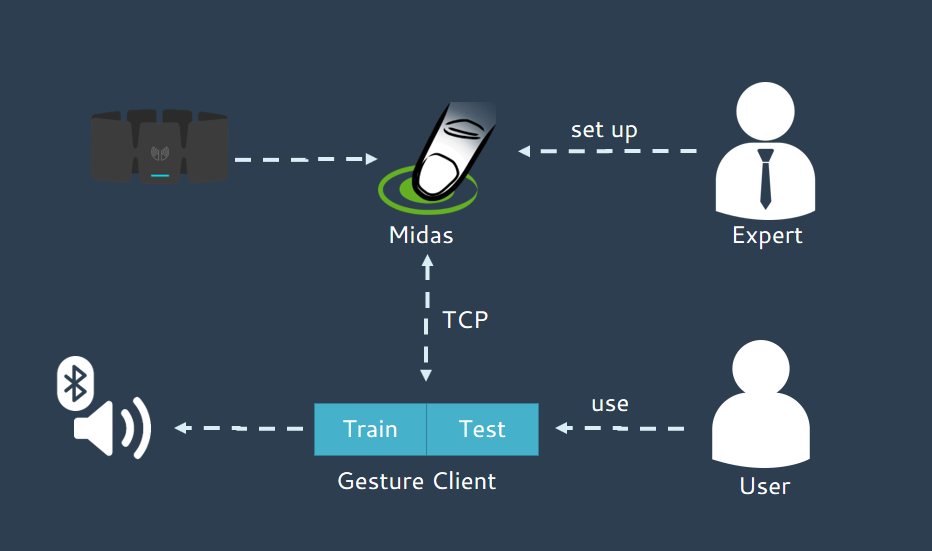

As we saw, Midas is a node designer, which works best for experts and not for ordinary users. The system concept statement says: “[…] the system must be as simple as possible, and it has to support multiple gesture recognition. If the user wants to train the gestures, there must be a way to do this without setting up a lot of thresholds.” To meet these requirements, an alternative piece of software for ordinary users was needed. I called it “Midas Gesture Client”. It has to communicate with Midas and provide a simple interface that lets the user carry out the two basic steps of gesture recognition, namely “Train” and “Test”. In the so-called “Train Mode”, the user should be able to train gestures easily. In the “Test Mode”, he can test the setup and define actions for the recognized gestures. For example, he can define a specific audio action that is triggered when a specific gesture is performed, e.g. the audio output “Hello” for a waving gesture. This only works if an expert has set up Midas for use with the “Midas Gesture Client”.

So here is my technical plan:

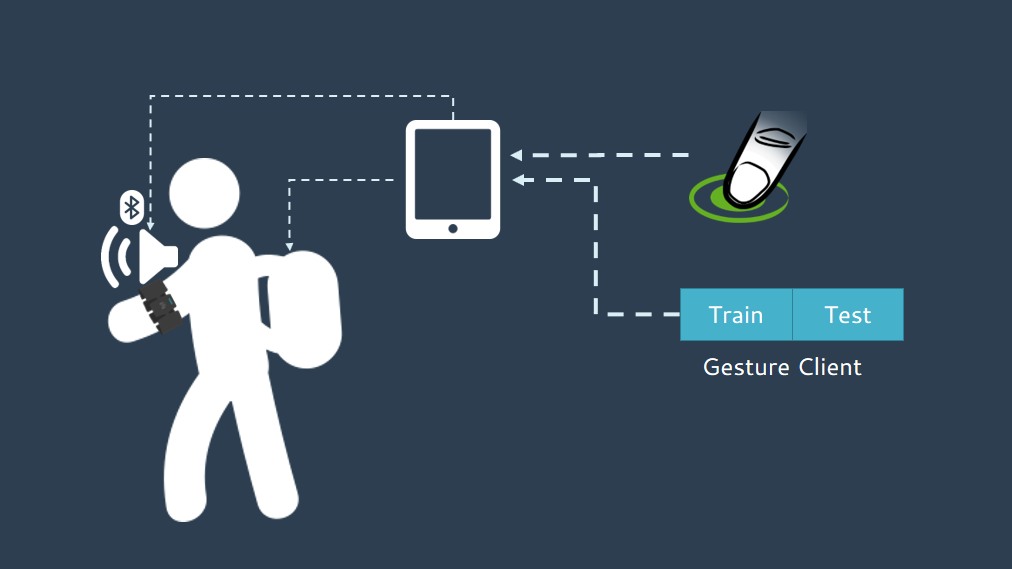

To make the system portable, this could be a possible scenario:

Midas Gesture Client

This software should provide one view for training gestures and one view for testing them.

Training Requirements

The user should be able to train the templates in an easy and flexible way. I will therefore use a countdown timer for the recording, in the style of “ready, set, go”. This is necessary for gestures with two hands, where it is crucial to have both hands free. In addition, the interface should show the quality of the recorded gestures. Basically, there are two quality measures: the first describes the similarity of the recordings within a group of equal gestures, and the second describes how well the group is separated from other, different gestures.

Testing Requirements

In the testing view, the user should be able to see which gesture is being performed and which one has been recognized. In addition, the user should be able to define what happens when a gesture has been recognized.

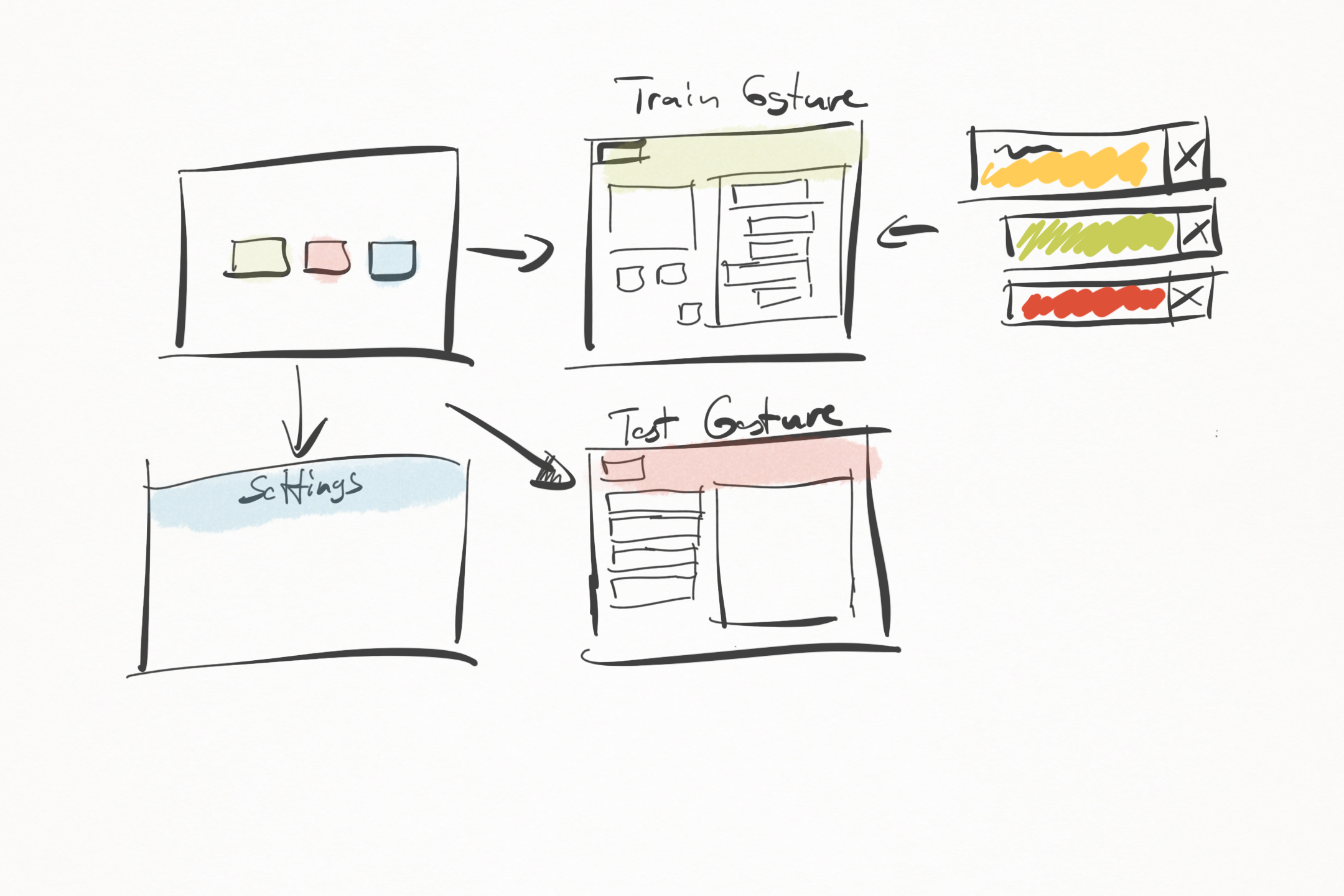

Sketches

Here are some sketches for the Midas Gesture Client:

This shows the workflow of the application. There are four screens: the main page, the train view, the test view and the settings view. The main page is needed to switch between the views.


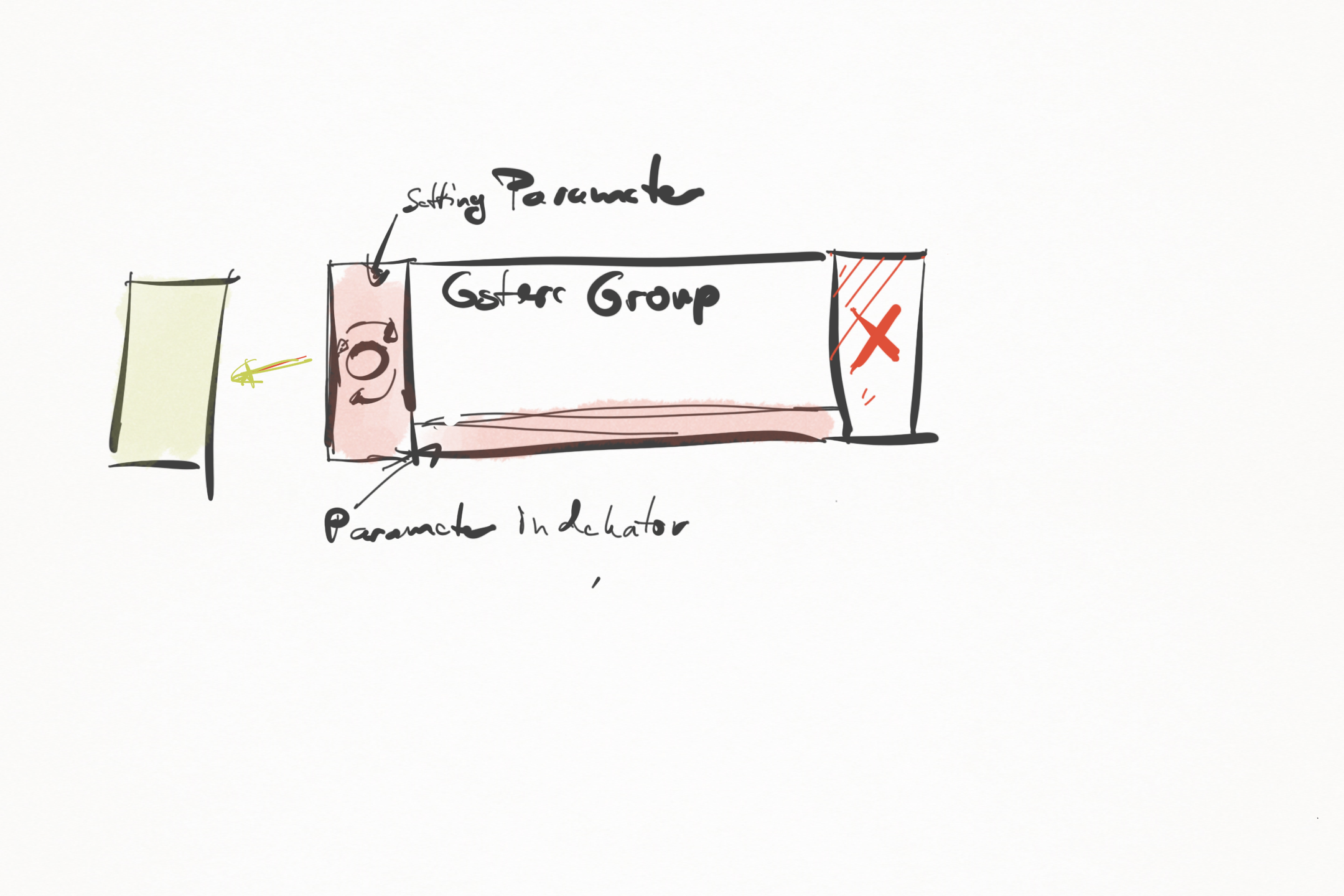

This is a template representation within the train view. It should indicate whether a template is recognized, as well as the quality of the recorded template.


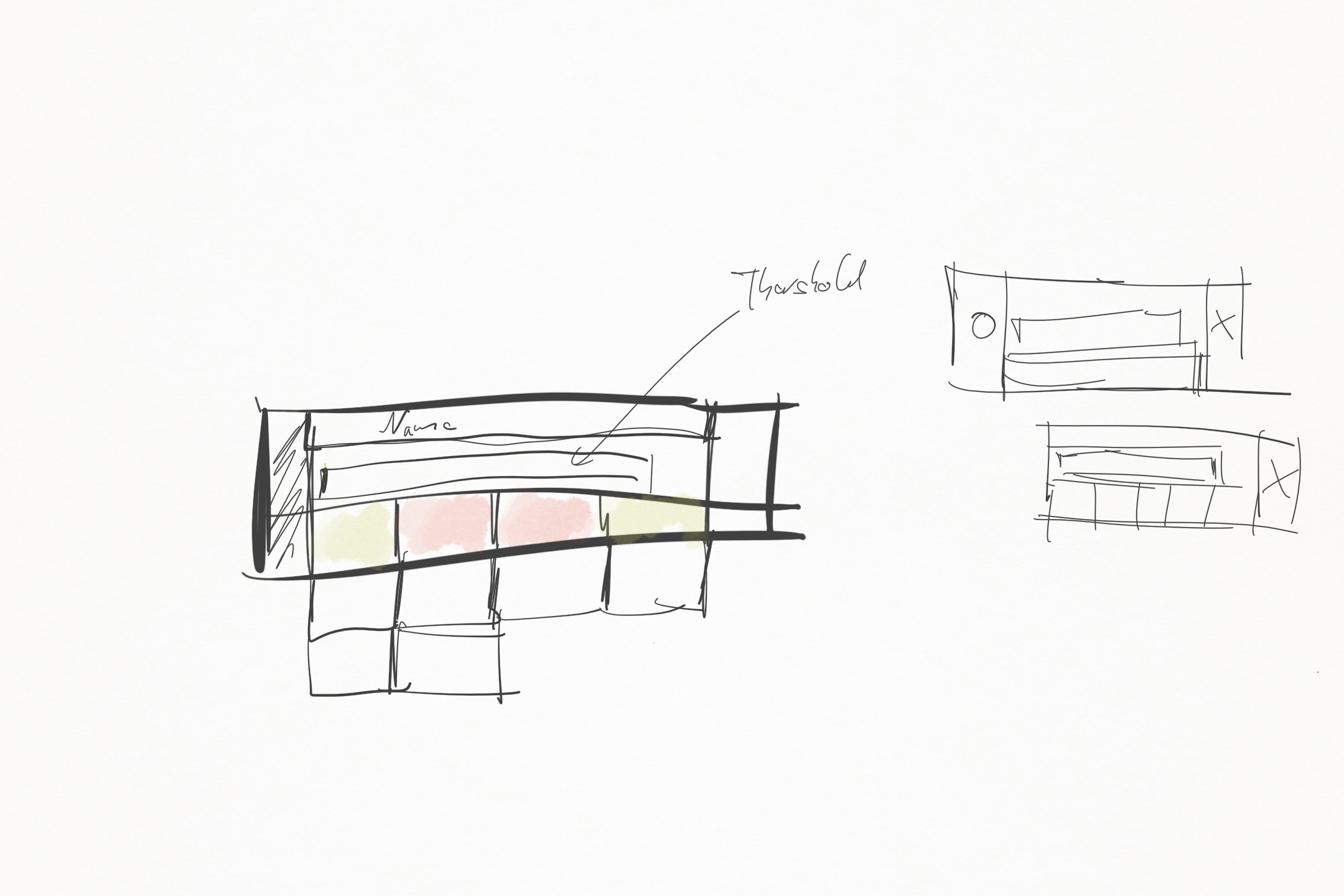

This is the second version of the template representation. It shows more information about the quality of the recording.


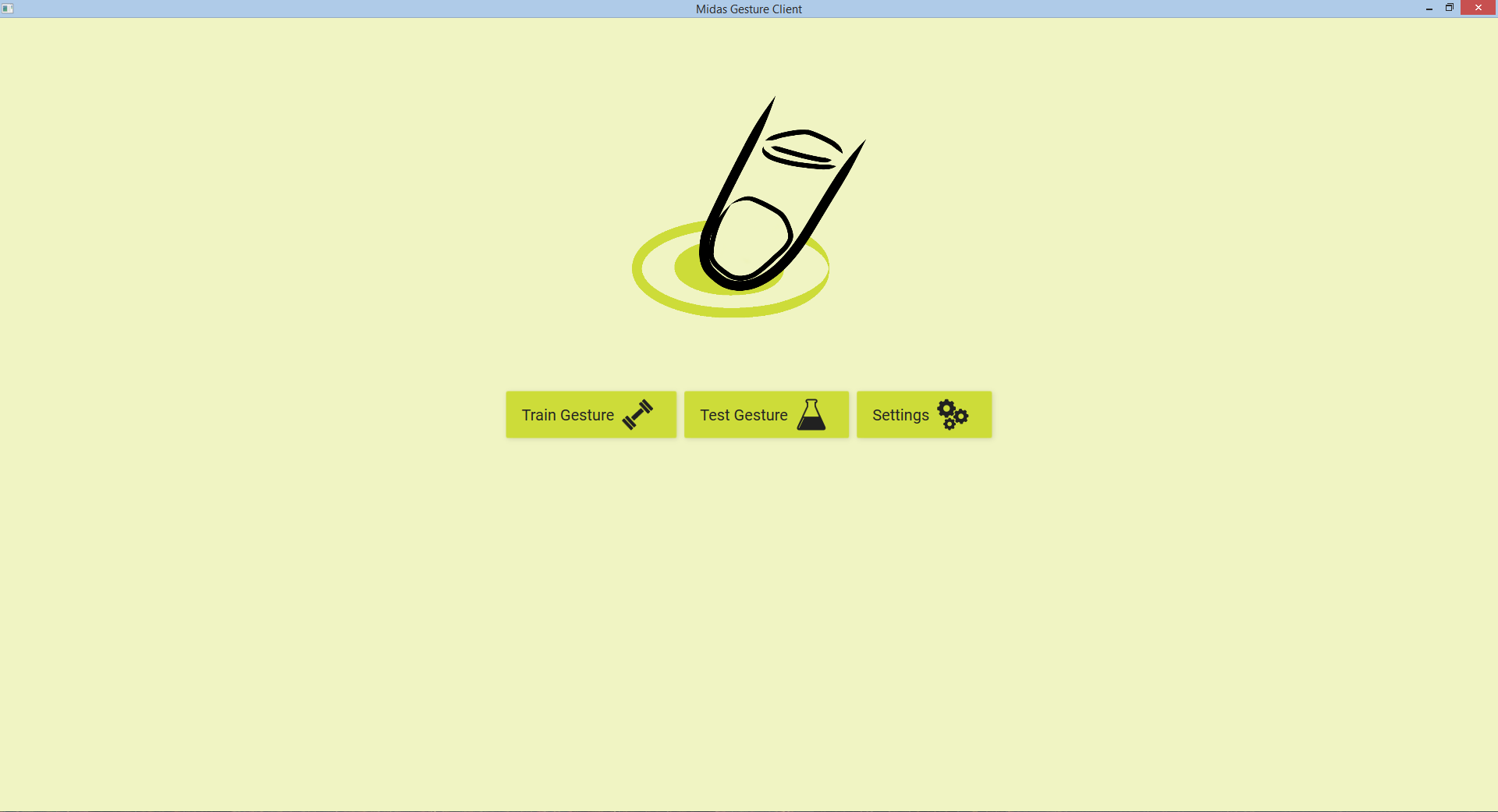

Final Version

This is the final version of the Midas Gesture Client. It starts with the main page, where the user can choose where he wants to go.

This is the train view. At the top, the user can go back to the main page or refresh the data. To record a gesture, he first has to enter the name of the template. Please note that all templates with the same name belong to the same group. After setting the name, he can set the duration of the gesture (default: 1 second). He can then start recording by pressing the “Start” button. A countdown runs from five seconds to zero, and then the user can perform the gesture until “Stop” is displayed. He can also stop earlier by pressing the stop button. As an alternative to pressing the buttons, the user can define keys for these actions. Once every step is completed, the template is displayed on the left.

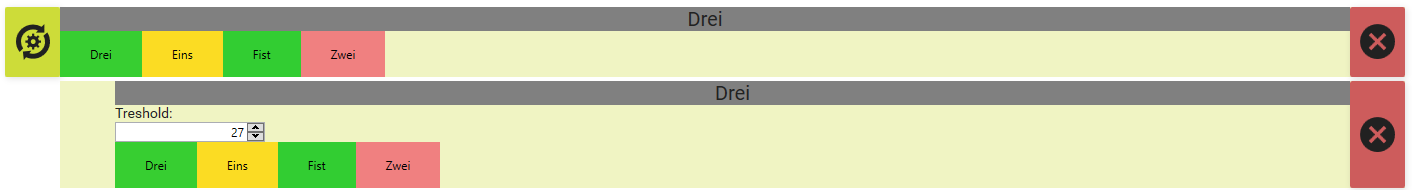

This is the template view in detail. There are two kinds of template representation: the group header (top) and the normal template representation (bottom). Let's start with the header, which sums up the information of all its child templates. It shows first the name of the group (in this case “Drei”) and second the quality, displayed by the colored rectangles. Green signifies “perfect”, yellow “medium” and red “bad”. If something is red, the gesture could easily be misinterpreted (in this case, “Drei” could be misinterpreted as “Zwei”). When a member of this group is recognized, the grey area flashes red. This helps the user to check whether the recording is right. The button on the left calculates all the thresholds, and the button on the right deletes the entry. The normal template representation (bottom) additionally has a field for setting the threshold manually.

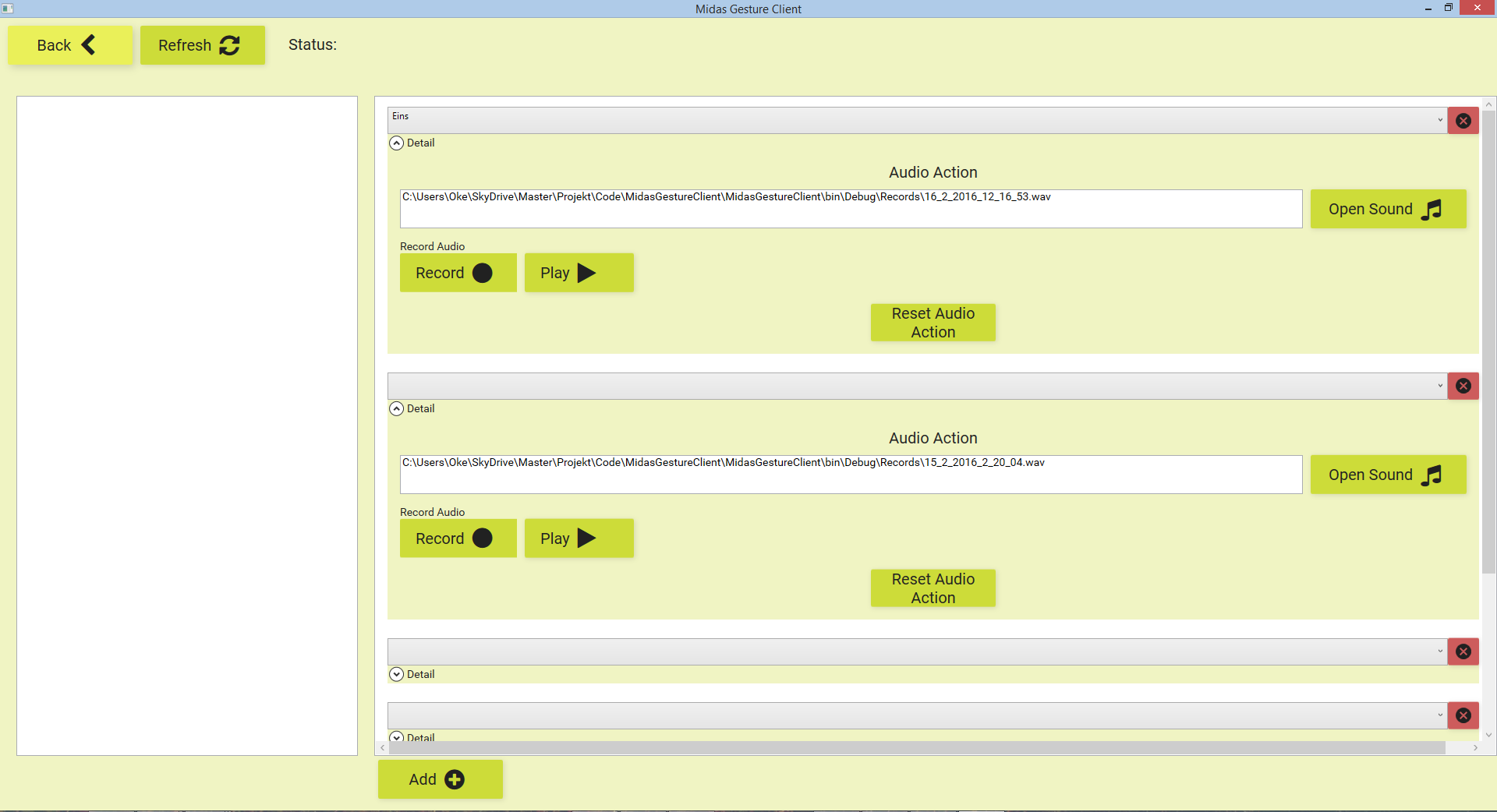

This is the test view. At the top, the user can go back to the main page or refresh the data. On the left side, the user can see which template has been recognized. On the right, he can define actions. To do so, he presses the “Add” button at the bottom; the red button deletes an entry. With the combo box at the top of an entry, the user can select the gesture. He then has two ways to define an audio action. The first is to open an audio file, which is played back when the gesture is recognized. The second is to record audio with a microphone.

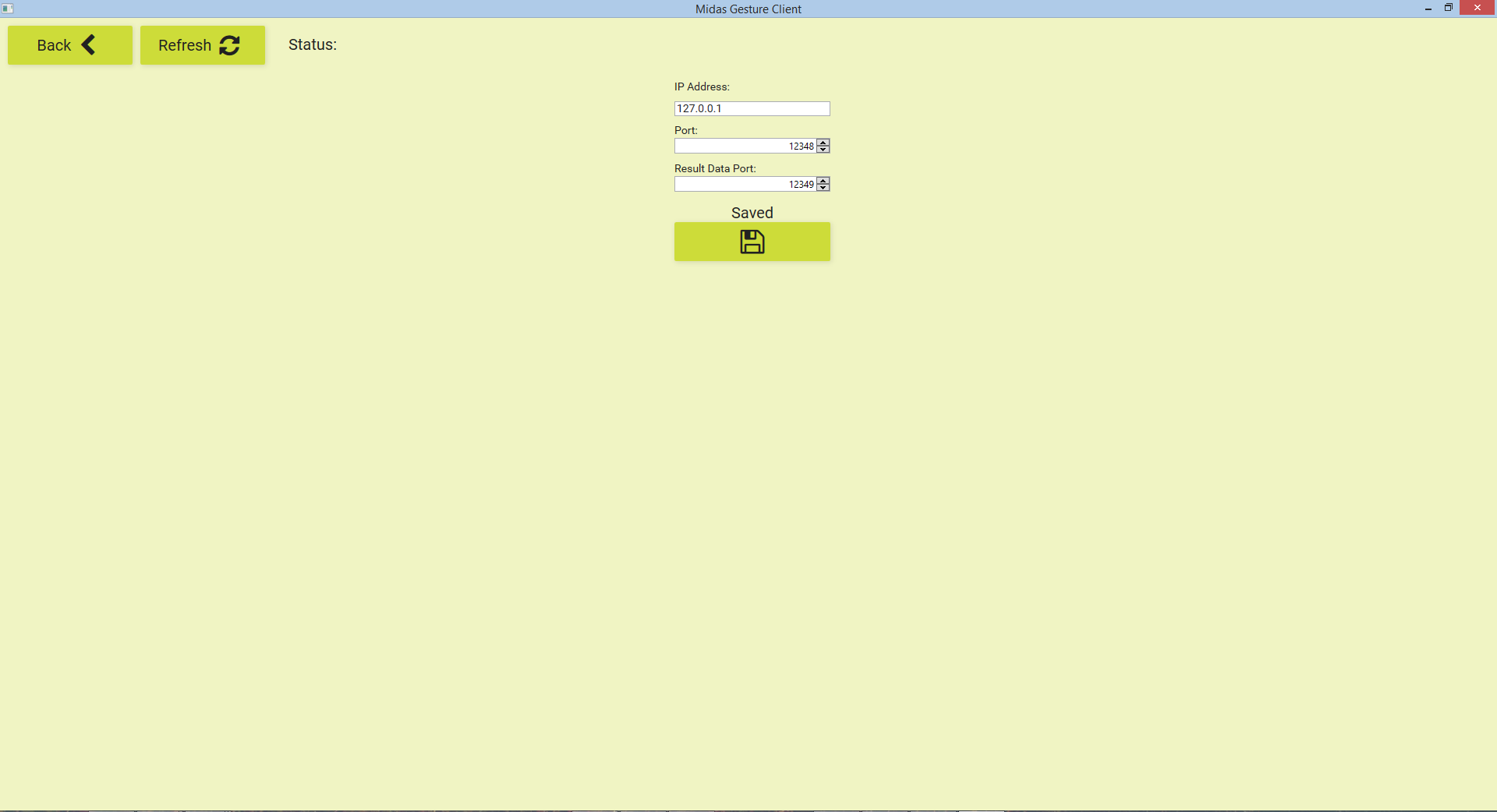

This is the settings view. Here the user can set the parameters for the communication with Midas. Please note that all settings made in the Midas Gesture Client are saved automatically and loaded again when the software is reopened.

Implementation

The software is built in C# and follows the MVVM-Light pattern, so for every view there is a corresponding view model. All in all, the software contains about 1500 lines of code.

Here are some special parts:

Image Button: To easily integrate icons into your buttons, you can use a font called FontAwesome.

    <Button Grid.Row="1" Grid.Column="1" HorizontalAlignment="Left"
            Width="160" Height="50" VerticalAlignment="Top" Margin="5,5,0,0"
            Command="{Binding AddCommand}" Style="{StaticResource ButtonStyle1}">
        <StackPanel Margin="0" Orientation="Horizontal"
                    VerticalAlignment="Center" HorizontalAlignment="Center">
            <TextBlock TextWrapping="Wrap" Text="Add" FontFamily="Roboto"
                       TextAlignment="Center" FontSize="20"
                       VerticalAlignment="Center" Foreground="#FF212121"/>
            <TextBlock Margin="10" VerticalAlignment="Center"
                       FontFamily="/MidasGestureClient;component/fonts/#FontAwesome"
                       Foreground="#212121" FontSize="30"
                       TextAlignment="Center" ></TextBlock>
        </StackPanel>
    </Button>

To construct the color coding from green to yellow to red, you can use the following code:

    // Maps 0..100 percent to a color: green -> yellow in the lower half,
    // yellow -> red in the upper half.
    public SolidColorBrush GetBlendedColor(double percentage)
    {
        if (percentage < 50)
            return Interpolate(Brushes.LimeGreen, Brushes.Yellow, percentage / 50.0);
        return Interpolate(Brushes.Yellow, Brushes.LightCoral, (percentage - 50) / 50.0);
    }

    // Blends the two brushes channel by channel; fraction 0 gives color1, 1 gives color2.
    private SolidColorBrush Interpolate(SolidColorBrush color1, SolidColorBrush color2, double fraction)
    {
        double r = Interpolate(color1.Color.R, color2.Color.R, fraction);
        double g = Interpolate(color1.Color.G, color2.Color.G, fraction);
        double b = Interpolate(color1.Color.B, color2.Color.B, fraction);
        return new SolidColorBrush(Color.FromArgb(255, (byte)Math.Round(r), (byte)Math.Round(g), (byte)Math.Round(b)));
    }

    // Linear interpolation between d1 and d2.
    private double Interpolate(double d1, double d2, double fraction)
    {
        return d1 - (d1 - d2) * fraction;
    }

The protocol defined for communicating with Midas is:

    // Every request is a text message of the form "<Command>:<payload>".
    public static void ConnectToServer(string ip, int port, int resultdataPort)
    {
        _port = port;
        _ip = ip;
        _resultDataPort = resultdataPort;
        FastClient.StartClient2("Connect:", ip, port);
        FastClient.StartClient2("Connect:", ip, resultdataPort);
    }

    public static void ReconnectData()
    {
        FastClient.StartClient2("Connect:", _ip, _resultDataPort);
    }

    public static void AskforTemplates()
    {
        FastClient.StartClient2("AskForTemplates:", _ip, _port);
    }

    public static void NewTemplateName(string name)
    {
        FastClient.StartClient2("TemplateName:" + name, _ip, _port);
    }

    public static void StartRecored()
    {
        FastClient.StartClient2("StartRecored:", _ip, _port);
    }

    public static void StopRecored()
    {
        FastClient.StartClient2("StopRecored:", _ip, _port);
    }

    public static void TrainTemplate(Guid g)
    {
        FastClient.StartClient2("TemplateTrain:" + g, _ip, _port);
    }

    public static void DeleteTemplate(Guid g)
    {
        FastClient.StartClient2("TemplateDelete:" + g, _ip, _port);
    }

    public static void NewThershold(List<TemplateThersholdEasy> l)
    {
        var msg = SerilaizeObject(l);
        FastClient.StartClient2("Thersholds:" + msg, _ip, _port);
    }

    // Incoming messages are dispatched by their prefix; the payload is XML.
    private static void FastClientOnNewDataEvent(string msg)
    {
        if (msg.StartsWith("Template:"))
        {
            msg = msg.Substring("Template:".Length);
            //Console.WriteLine(msg);
            if (msg.EndsWith("</ArrayOfTemplate>"))
            {
                var serializer = new XmlSerializer(typeof(List<Template>));
                var l = serializer.Deserialize(GenerateStreamFromString(msg));
                var list = l as List<Template>;
                if (NewTemplatesEvent != null && list != null)
                {
                    Application.Current.Dispatcher.Invoke(() => NewTemplatesEvent(list));
                }
            }
        }
        if (msg.StartsWith("Result:"))
        {
            msg = msg.Substring("Result:".Length);
            var serializer = new XmlSerializer(typeof(Result));
            var l = serializer.Deserialize(GenerateStreamFromString(msg));
            if (NewResultEvent != null && l != null)
            {
                if (Application.Current != null)
                    Application.Current.Dispatcher.Invoke(() => NewResultEvent(l as Result));
            }
        }
        if (msg.StartsWith("Thersholds:"))
        {
            msg = msg.Substring("Thersholds:".Length);
            var serializer = new XmlSerializer(typeof(List<TemplateThersholdEasy>));
            var l = serializer.Deserialize(GenerateStreamFromString(msg));
            if (NewTemplateThersholdsEvent != null && l != null)
            {
                Application.Current.Dispatcher.Invoke(() => NewTemplateThersholdsEvent(l as List<TemplateThersholdEasy>));
            }
        }
    }

Hardware

For the hardware I used:

- a Bluetooth Speaker http://www.taotronics.com/taotronics-tt-sk03-bluetooth-speaker.html

- PC

- Xsens MTi-3 AHRS (planned) https://www.xsens.com/products/mti-1-series/

Myo

The challenge of using the EMG data streams was that the data cannot be reproduced exactly, even for the same gesture. Furthermore, the large variations in the data make comparisons difficult. So I had to find a way to reduce these fluctuations. The solution is to calculate the energy that is needed for the contraction.

IMU

My vision was to obtain the position of the hand in order to distinguish the gestures more reliably. One way to do this is to use an IMU. A very good guide to these units is: https://www.sparkfun.com/pages/accel_gyro_guide

After a long web search I found this: https://www.xsens.com/products/xsens-mvn/ With this system you are able to track every movement a person makes. But the system costs more than 5000€, so I decided to order a cheaper developer version of the chip.

Xsens MTi-3 AHRS: https://www.xsens.com/products/mti-1-series/ This chip calculates the orientation very well and also provides a free acceleration signal, also known as linear acceleration. This is the accelerometer reading with the influence of Earth's gravity removed. What makes it special is that it is calculated on the chip itself. The data is sampled on the chip at 1000 Hz and, in theory, delivered to the PC at 400 Hz.

So I thought that I could calculate the position with its help, but it is not as easy as I thought.

I tried the following: http://www.x-io.co.uk/oscillatory-motion-tracking-with-x-imu/ http://www.x-io.co.uk/gait-tracking-with-x-imu/

Unfortunately, without success. The drift of the calculated data was too high to obtain a correct position.
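One common reason for such drift is that position is obtained by integrating acceleration twice, so even a small constant error in the acceleration grows quadratically over time. The following sketch illustrates this; the class name `DriftSketch` and the numbers used below (a bias of 0.01 m/s², a 400 Hz sample rate, 10 seconds) are hypothetical values chosen for illustration, not measurements from this project.

```csharp
using System;

public static class DriftSketch
{
    // Integrates a constant acceleration error twice (simple Euler steps)
    // and returns the resulting position error in metres.
    public static double PositionError(double biasMps2, double sampleRateHz, double seconds)
    {
        double dt = 1.0 / sampleRateHz;
        int steps = (int)Math.Round(seconds * sampleRateHz);
        double velocity = 0, position = 0;
        for (int i = 0; i < steps; i++)
        {
            velocity += biasMps2 * dt;   // first integration: velocity error
            position += velocity * dt;   // second integration: position error
        }
        return position;
    }
}
```

With the assumed values, `DriftSketch.PositionError(0.01, 400, 10)` comes out at roughly half a metre, in line with the closed form 0.5 · b · t². For hand gestures, which span only a few tens of centimetres, an error of that size makes the position unusable.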

So I decided to end this attempt at this point, but I am sure there is a way to calculate the position, given more time.

Midas Changes

These are the changes that had to be made in Midas so that it works with the Midas Gesture Client.

Communication:

For the communication between Midas and the Gesture Client, a mechanism had to be developed that serializes and deserializes objects automatically. For this I used the XmlSerializer, which converts the objects to XML so that they can easily be transmitted over the network. Accordingly, the following nodes in Midas were implemented:

- Object Serializer

- Object Deserializer

In addition, the existing TCP communication was improved and extended. For this purpose, a data header containing the length of the data was introduced. This enables fast transfers of large amounts of data.
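The idea of a length header combined with XML serialization can be sketched as follows. The actual header layout used by Midas is not documented here, so the 4-byte length prefix in platform byte order, the class name `FramingSketch` and the method names are assumptions for illustration only.

```csharp
using System;
using System.IO;
using System.Xml.Serialization;

public static class FramingSketch
{
    // Serializes an object to XML and prefixes it with a 4-byte length header.
    public static byte[] Pack<T>(T value)
    {
        var serializer = new XmlSerializer(typeof(T));
        using (var buffer = new MemoryStream())
        {
            serializer.Serialize(buffer, value);
            byte[] payload = buffer.ToArray();
            byte[] header = BitConverter.GetBytes(payload.Length);
            var frame = new byte[header.Length + payload.Length];
            Buffer.BlockCopy(header, 0, frame, 0, header.Length);
            Buffer.BlockCopy(payload, 0, frame, header.Length, payload.Length);
            return frame;
        }
    }

    // Reads exactly one frame from the stream and deserializes its payload.
    public static T Unpack<T>(Stream stream)
    {
        byte[] header = ReadExactly(stream, 4);
        int length = BitConverter.ToInt32(header, 0);
        byte[] payload = ReadExactly(stream, length);
        var serializer = new XmlSerializer(typeof(T));
        using (var buffer = new MemoryStream(payload))
            return (T)serializer.Deserialize(buffer);
    }

    // Stream.Read may return fewer bytes than requested, so loop until done.
    private static byte[] ReadExactly(Stream stream, int count)
    {
        var data = new byte[count];
        int offset = 0;
        while (offset < count)
        {
            int read = stream.Read(data, offset, count - offset);
            if (read == 0) throw new EndOfStreamException();
            offset += read;
        }
        return data;
    }
}
```

Because the receiver knows the payload length in advance, it can read a complete message in one loop instead of scanning the incoming text for an end marker, and several messages can follow each other on the same connection.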

Filter:

As described above, it is difficult to reproduce an EMG signal exactly with a gesture. Therefore, the raw signal must be filtered in such a way that the strength of the contraction can be determined. This is done with two filters, an absolute-value filter and a smoothing filter. The smoothing filter is an averaging filter calculated from the previous five datasets. I also tried a frequency filter. Unfortunately, it did not perform well and did not deliver good results (filters tried: low pass and band pass).
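The combination of the two filters can be sketched for a single EMG channel as follows. This is an illustration, not the Midas node code: the class name `EmgEnvelopeSketch` is hypothetical, and the sketch assumes that the averaging window contains the current sample plus the preceding ones, five in total by default.

```csharp
using System;
using System.Collections.Generic;

public class EmgEnvelopeSketch
{
    private readonly Queue<double> _window = new Queue<double>();
    private readonly int _size;
    private double _sum;

    public EmgEnvelopeSketch(int windowSize = 5)
    {
        if (windowSize < 1) throw new ArgumentOutOfRangeException(nameof(windowSize));
        _size = windowSize;
    }

    // Rectifies the sample (absolute value) and returns the mean of the
    // most recent rectified samples (moving average).
    public double Process(double sample)
    {
        double rectified = Math.Abs(sample);
        _window.Enqueue(rectified);
        _sum += rectified;
        if (_window.Count > _size)
            _sum -= _window.Dequeue();
        return _sum / _window.Count;
    }
}
```

An EMG signal oscillates around zero, so averaging the raw values would cancel out. Taking the absolute value first turns the oscillation into a measure of contraction strength, and the moving average then suppresses the sample-to-sample fluctuations.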

Accordingly, the following nodes in Midas were implemented:

- Abs Filter

- Smoothing Filter

- Frequency Filter

Some code to implement the frequency filters (I use the library MathNet.Filtering):

    private float[] LowPassOnline(SignalData input)
    {
        //calculating the sample rate
        if (_filter == null)
        {
            // var dt = (input.Data.Length / (Math.Abs(input.Time[0] - input.Time[input.Time.Length - 1]) / 1000000000.0));
            if (ClalculateFrequency(input))
                _filter = OnlineFilter.CreateLowpass(ImpulseResponse.Finite, MessuredFrequency, Frequency);
            //_filter = OnlineFirFilter.CreateLowpass(ImpulseResponse.Finite, dt, Frequency, 30);
        }
        if (_filter != null)
        {
            var r = _filter.ProcessSamples(Array.ConvertAll(input.Data, x => (double)x));
            return Array.ConvertAll(r, Convert.ToSingle);
        }
        return new[] { 0f };
    }

    private float[] HighPassOnline(SignalData input)
    {
        //calculating the sample rate
        //var dt = (input.Data.Length / (Math.Abs(input.Time[0] - input.Time[input.Time.Length - 1]) / 1000000000.0));
        if (_filter == null)
        {
            if (ClalculateFrequency(input))
                _filter = OnlineFilter.CreateHighpass(ImpulseResponse.Finite, MessuredFrequency, Frequency);
            //_filter = OnlineFirFilter.CreateLowpass(ImpulseResponse.Finite, dt, Frequency, 30);
        }
        if (_filter != null)
        {
            var r = _filter.ProcessSamples(Array.ConvertAll(input.Data, x => (double)x));
            return Array.ConvertAll(r, Convert.ToSingle);
        }
        return new[] { 0f };
    }

    private float[] BandPassOnline(SignalData input)
    {
        //calculating the sample rate
        // var dt = (input.Data.Length / (Math.Abs(input.Time[0] - input.Time[input.Time.Length - 1]) / 1000000000.0));
        if (_filter == null)
        {
            if (ClalculateFrequency(input))
                _filter = OnlineFilter.CreateBandpass(ImpulseResponse.Finite, MessuredFrequency, Frequency, HighFrequency);
            //_filter = OnlineFirFilter.CreateLowpass(ImpulseResponse.Finite, dt, Frequency, 30);
        }
        if (_filter != null)
        {
            var r = _filter.ProcessSamples(Array.ConvertAll(input.Data, x => (double)x));
            return Array.ConvertAll(r, Convert.ToSingle);
        }
        return new[] { 0f };
    }

Threshold:

To ensure that the templates are identified correctly, the right thresholds are needed; otherwise a gesture is either not recognized at all or recognized too often. Both are undesirable, and for that reason I tried to develop a method that calculates these thresholds automatically. First, the templates of a group are compared with each other and the distances between them are calculated. Then the minimum, average, median and maximum distance are determined. The threshold value is derived from these four values. This only works if enough templates have been recorded. Therefore, it is always possible to adjust the threshold manually.
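The statistics step can be sketched as follows. How Midas combines the four values into the final threshold is not described here, so the `SuggestThreshold` rule below (maximum in-group distance plus a margin) is purely a hypothetical placeholder, as are the names `ThresholdSketch`, `Stats` and `margin`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ThresholdSketch
{
    public struct Stats
    {
        public double Min, Average, Median, Max;
    }

    // Summarises the pairwise distances between the templates of one group.
    public static Stats Summarise(IList<double> distances)
    {
        if (distances.Count == 0)
            throw new ArgumentException("At least one distance is required.");

        double[] sorted = distances.OrderBy(d => d).ToArray();
        int mid = sorted.Length / 2;
        double median = sorted.Length % 2 == 1
            ? sorted[mid]
            : (sorted[mid - 1] + sorted[mid]) / 2.0;

        return new Stats
        {
            Min = sorted[0],
            Average = sorted.Average(),
            Median = median,
            Max = sorted[sorted.Length - 1]
        };
    }

    // Hypothetical rule (an assumption, not the Midas formula): accept
    // everything up to the largest in-group distance plus a safety margin
    // proportional to the spread of the group.
    public static double SuggestThreshold(Stats stats, double margin = 0.1)
    {
        return stats.Max + margin * (stats.Max - stats.Min);
    }
}
```

With only two or three templates per group the statistics are dominated by single recordings, which is why a manual override of the threshold remains useful.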

Accordingly, the following nodes in Midas were implemented:

- Calculate Thresholds

- Template Manager

Result:

It may happen that, shortly after a gesture has been recognized, another gesture is detected. Therefore, a filter was built that checks the results and filters out such follow-up detections. This prevents a second gesture from being detected by mistake.
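One simple way to build such a filter is a hold-off period: after an accepted detection, further detections are ignored for a fixed time. The sketch below shows this idea; whether the Midas Result Filter works exactly like this is an assumption, and the class name `ResultFilterSketch` and the `holdOff` parameter are hypothetical.

```csharp
using System;

public class ResultFilterSketch
{
    private readonly TimeSpan _holdOff;
    private DateTime? _lastAccepted;

    public ResultFilterSketch(TimeSpan holdOff)
    {
        _holdOff = holdOff;
    }

    // Returns true if the detection should be passed on, false if it falls
    // inside the hold-off window after the previously accepted detection.
    public bool Accept(DateTime timestamp)
    {
        if (_lastAccepted.HasValue && timestamp - _lastAccepted.Value < _holdOff)
            return false;

        _lastAccepted = timestamp;
        return true;
    }
}
```

The timestamp is passed in explicitly rather than read from the system clock, which keeps the filter deterministic and easy to test with recorded data.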

Accordingly, the following nodes in Midas were implemented:

- Result Filter

Challenges:

I realized that I have only 17 ms to run all the detection functions; otherwise the system is no longer real time. This was a hard challenge, because when up to ten templates are compared with the stream at once, 17 ms are not enough. So I tried to move all the calculations into threads. But the thread pool grew bigger and bigger, which led to the conclusion that a sequential implementation would be even faster than one using threads. The solution in this case was to lower the frame rate, but not the resolution, and to bundle more calculations into a single thread.

Future Work

- Try to calculate the correct position with the help of an IMU.

- Build a mode switcher that is activated by performing an easily recognizable gesture. This would make it possible to activate some templates and deactivate others. For example, holding a fist activates the mode switcher. While the first mode is active, the gestures of mode one can be recognized. After switching to mode two, all the gestures of that mode can be recognized, but not the gestures of mode one.

- To avoid wrong gesture recognition, it could be helpful to use a database that shows how likely the words are to occur in the detected order, and possibly to choose a better alternative.